Explainable AI: Unveiling the Black Box and Building Trust

Artificial Intelligence (AI) is rapidly transforming industries, from healthcare and finance to transportation and entertainment. While AI models, particularly those based on deep learning, are achieving remarkable performance in complex tasks, they often operate as "black boxes." Their internal decision-making processes are opaque, making it difficult to understand why a specific prediction was made. This lack of transparency raises concerns about trust, accountability, and fairness, hindering the widespread adoption of AI in critical applications. Enter Explainable AI (XAI), a field dedicated to developing AI models and techniques that provide human-understandable explanations for their decisions.

The Need for Explainability:

The rise of powerful yet inscrutable AI systems has highlighted several critical issues:

- Lack of Trust: If users don’t understand how an AI system arrives at a decision, they are less likely to trust its recommendations, especially in high-stakes scenarios like medical diagnoses or loan approvals.

- Bias and Discrimination: Black box AI models can perpetuate and even amplify existing biases in training data, leading to unfair or discriminatory outcomes. Without explainability, these biases can be difficult to detect and correct.

- Accountability and Responsibility: When an AI system makes an error or causes harm, it’s crucial to understand why the error occurred. Explainability allows us to identify the root causes of failures and hold the appropriate parties accountable.

- Regulatory Compliance: Regulations increasingly address automated decision-making. The EU’s GDPR, for example, entitles individuals to meaningful information about the logic involved in automated decisions that significantly affect them.

- Improved Model Performance: Understanding the reasoning behind an AI model’s predictions can help identify weaknesses and areas for improvement, leading to better overall performance.

- Knowledge Discovery: XAI can help uncover new insights and relationships within data that might not be apparent through traditional analysis.

What is Explainable AI (XAI)?

Explainable AI (XAI) is a set of methods and techniques designed to make AI systems more understandable to humans. It aims to provide insights into how AI models work, why they make specific decisions, and what factors influence their predictions. XAI is not about creating perfect transparency (which may be impossible or undesirable), but rather about providing sufficient information for users to understand, trust, and effectively interact with AI systems.

Key Characteristics of Explainable AI:

- Interpretability: The ability to understand the relationship between inputs and outputs of a model. A highly interpretable model is easy to understand and reason about.

- Explainability: The ability to provide human-understandable explanations for a model’s decisions. This goes beyond simply understanding the model’s structure; it involves explaining why a particular prediction was made in a specific instance.

- Transparency: The degree to which the inner workings of a model are visible and understandable. A transparent model allows users to see how the data is processed and transformed.

- Trustworthiness: The degree to which users can rely on the accuracy, reliability, and fairness of an AI system. Explainability contributes to trustworthiness by allowing users to assess the model’s behavior and identify potential issues.

Different Approaches to Explainable AI:

XAI methods can be broadly categorized into two main types:

Intrinsic Explainability: This involves building inherently interpretable models. These models are designed from the ground up to be transparent and easy to understand. Examples include:

- Linear Regression: A simple and well-understood model in which the prediction is a weighted sum of the inputs, so each coefficient states how much the output changes per unit change in a feature.

- Decision Trees: Models that represent decisions as a tree-like structure, making it easy to follow the decision-making process.

- Rule-Based Systems: Systems that use a set of explicit rules to make decisions, providing clear and understandable logic.

- Generalized Additive Models (GAMs): Models that predict an outcome as a sum of separate, possibly non-linear functions of individual features. Because each feature’s effect can be plotted on its own, GAMs offer a balance between accuracy and interpretability.
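To make the idea of an inherently interpretable model concrete, here is a minimal sketch of a linear scoring model whose explanation can be read straight off its parameters. The feature names, weights, and bias are hypothetical values chosen for illustration, not taken from any real system.

```python
# Hypothetical weights for an illustrative credit-scoring model (assumed values).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.0, "years_employed": 0.1}
BIAS = -1.0


def predict(applicant):
    """Linear score: the bias plus the sum of weight * feature value."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant):
    """Per-feature contributions; together with the bias they add up to the score."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


applicant = {"income_k": 50, "debt_ratio": 0.5, "years_employed": 4}
score = predict(applicant)
contributions = explain(applicant)

# List the features from most to least influential for this applicant.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {value:+.2f}")
print(f"{'bias':>15}: {BIAS:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```

No separate explanation method is needed here: the contributions and the bias add up to the score, so the explanation is exact by construction.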

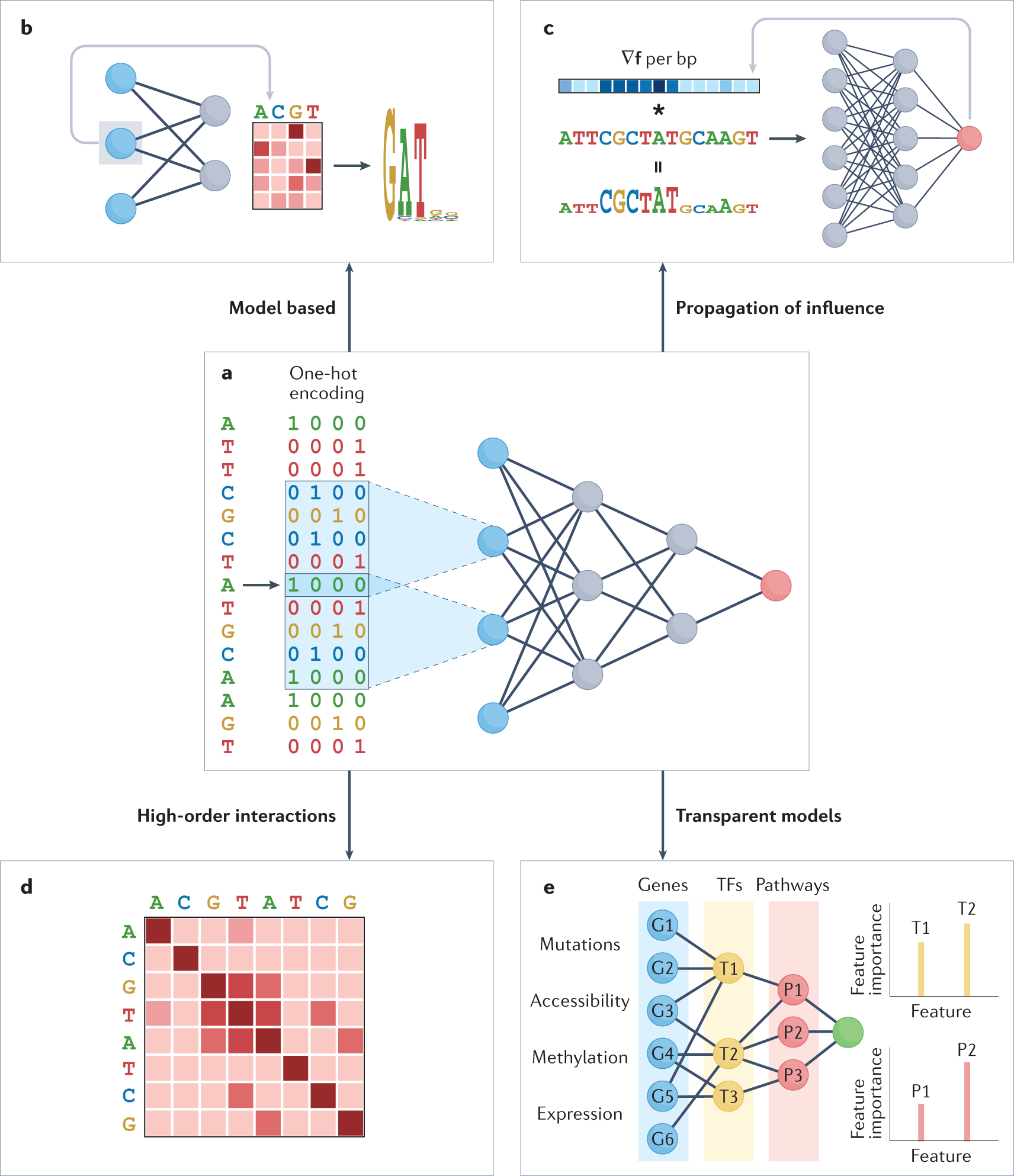

Post-hoc Explainability: This involves applying techniques to explain the decisions of already trained, potentially complex, "black box" models. These techniques provide explanations after the model has been built. Examples include:

- LIME (Local Interpretable Model-agnostic Explanations): LIME approximates the behavior of a complex model locally around a specific prediction by creating a simpler, interpretable model. It highlights the features that are most important for that particular prediction.

- SHAP (SHapley Additive exPlanations): SHAP uses Shapley values from cooperative game theory to assign each feature a share of the difference between a prediction and a baseline output. The shares add up to that difference, which gives a consistent account of feature importance across predictions.

- Attention Mechanisms: In deep learning models built with attention layers, the attention weights show which parts of the input the model weighted most heavily for a prediction. They give a hint about which features are relevant, although how faithfully they reflect the model’s reasoning is debated.

- Rule Extraction: Techniques that attempt to extract a set of rules from a trained model, allowing users to understand the model’s logic in a more human-readable format.

- Counterfactual Explanations: These explanations identify the smallest changes to the input that would lead to a different prediction. They help users understand what factors are critical for a specific outcome.
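The last item in the list, counterfactual explanations, can be sketched with a simple brute-force search. The example below assumes a hypothetical loan classifier (`approve`) with made-up integer weights and a made-up threshold, and it only tries changing one feature at a time. A practical search would also restrict candidates to plausible values and consider changing several features together.

```python
def approve(applicant):
    """Hypothetical black-box classifier: True means the loan is approved."""
    score = (2 * applicant["income_k"]
             - 3 * applicant["debt_pct"]
             + 5 * applicant["years_employed"])
    return score >= 100


def smallest_flip(predict, instance, feature, step=1, max_steps=100):
    """Smallest change to one feature (a multiple of step) that flips the prediction.

    Returns the signed change, or None if no flip is found within max_steps.
    """
    original = predict(instance)
    for n in range(1, max_steps + 1):
        for direction in (1, -1):
            candidate = dict(instance)
            candidate[feature] = instance[feature] + direction * n * step
            if predict(candidate) != original:
                return direction * n * step
    return None


applicant = {"income_k": 50, "debt_pct": 40, "years_employed": 4}
flips = {name: smallest_flip(approve, applicant, name) for name in applicant}

print("approved:", approve(applicant))
for name, change in flips.items():
    print(f"changing {name} by {change:+d} would flip the decision")
```

Each line of the output is one counterfactual of the form "had this feature been different by this much, the decision would have changed", which is often easier for an affected person to act on than a list of feature weights.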

Evaluating Explainability:

Evaluating the effectiveness of XAI techniques is crucial. Several metrics and approaches are used:

- Human Subject Studies: Involving users to assess the understandability and usefulness of explanations.

- Functionality Metrics: Measuring how well explanations help users perform tasks, such as identifying errors or making informed decisions.

- Faithfulness Metrics: Assessing how accurately explanations reflect the actual decision-making process of the model.

- Compactness Metrics: Evaluating the simplicity and conciseness of explanations.

- Trust Metrics: Measuring the degree to which explanations increase user trust in the AI system.
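As one illustration of a faithfulness metric, the sketch below implements a deletion-style check: reset the features an explanation ranks highest to a baseline value and measure how far the prediction falls. The model, weights, and both attribution vectors are hypothetical. The assumption behind the check is that a faithful explanation should produce a larger drop than an unfaithful one.

```python
# Hypothetical model: a weighted sum with assumed weights.
WEIGHTS = [3.0, 0.5, 1.0, 0.25]


def model(x):
    return sum(w * v for w, v in zip(WEIGHTS, x))


def deletion_drop(predict, instance, baseline, attributions, k):
    """Drop in prediction after resetting the k top-attributed features to baseline."""
    ranked = sorted(range(len(instance)), key=lambda i: abs(attributions[i]), reverse=True)
    perturbed = list(instance)
    for i in ranked[:k]:
        perturbed[i] = baseline[i]
    return predict(instance) - predict(perturbed)


instance = [1.0, 1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0, 0.0]

faithful = [3.0, 0.5, 1.0, 0.25]    # matches what the model actually uses
unfaithful = [0.25, 1.0, 0.5, 3.0]  # ranks the least important feature first

print("faithful explanation:  ", deletion_drop(model, instance, baseline, faithful, k=2))
print("unfaithful explanation:", deletion_drop(model, instance, baseline, unfaithful, k=2))
```

Removing the two features named by the faithful explanation changes the prediction far more than removing the two named by the unfaithful one, which is the signal this kind of metric looks for.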

Challenges and Future Directions:

Despite the progress in XAI, significant challenges remain:

- The Trade-off Between Accuracy and Explainability: Complex models often achieve higher accuracy than simpler, interpretable models. Finding the right balance between accuracy and explainability is a key challenge.

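- Scalability: Applying XAI techniques to large models and datasets can be computationally expensive. Exact Shapley values, for example, require evaluating the model on a number of feature subsets that grows exponentially with the number of features.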

- Contextualization: Explanations need to be tailored to the specific context and audience. A technical explanation might be appropriate for an expert, while a simpler explanation is needed for a layperson.

- Causality vs. Correlation: XAI techniques often identify correlations between features and predictions. A feature that an explanation marks as important is one the model relies on, not necessarily a cause of the outcome in the real world.

- Adversarial Explainability: Explanations can be manipulated to mislead users or hide biases. Developing robust and reliable explanations is crucial.

- Standardization: A lack of standardized metrics and evaluation methods makes it difficult to compare different XAI techniques.

Future research in XAI will focus on:

- Developing more scalable and efficient XAI techniques.

- Creating more context-aware and personalized explanations.

- Improving the robustness and reliability of explanations.

- Developing standardized metrics and evaluation methods for XAI.

- Integrating XAI into the AI development lifecycle.

- Exploring the ethical and societal implications of XAI.

Conclusion:

Explainable AI is essential for building trust, accountability, and fairness in AI systems. By providing human-understandable explanations for AI decisions, XAI empowers users to understand, validate, and effectively interact with AI. While challenges remain, the ongoing research and development in XAI are paving the way for a future where AI is not just powerful, but also transparent and trustworthy. As AI becomes increasingly integrated into our lives, XAI will play a critical role in ensuring that AI benefits all of humanity.

FAQ:

Q: What is the difference between interpretability and explainability?

A: Interpretability refers to the degree to which a human can understand the cause and effect relationships within a model. Explainability goes further and provides human-understandable reasons for specific decisions made by the model. A model can be interpretable but not very explainable, and vice versa.

Q: Is XAI only important for high-stakes applications?

A: While XAI is particularly crucial for high-stakes applications like healthcare and finance, it’s also valuable in other areas. Understanding why an AI system makes a recommendation, even for something as simple as a product suggestion, can improve user trust and satisfaction.

Q: Are interpretable models always better than black box models?

A: Not necessarily. Black box models often achieve higher accuracy than interpretable models, especially in complex tasks. The choice between an interpretable model and a black box model depends on the specific application and the importance of explainability.

Q: Can XAI techniques reveal biases in AI models?

A: Yes, XAI techniques can help identify biases in AI models by revealing which features are most influential in making predictions. If certain features related to sensitive attributes (e.g., race, gender) are found to be disproportionately influential, it may indicate bias.
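A simple way to probe for this kind of influence is a flip test: swap the sensitive attribute for each record and count how often the decision changes. The sketch below uses a hypothetical, deliberately biased model and made-up applicant data. Note that a flip rate of zero would not prove the absence of bias, since other features can act as proxies for the sensitive one.

```python
def biased_model(applicant):
    """Hypothetical model that applies a stricter income threshold to group B."""
    threshold = 40 if applicant["group"] == "A" else 50
    return applicant["income_k"] >= threshold


def flip_rate(predict, rows, feature, values):
    """Fraction of rows whose prediction changes when a binary feature is swapped."""
    first, second = values
    changed = 0
    for row in rows:
        flipped = dict(row)
        flipped[feature] = second if row[feature] == first else first
        if predict(flipped) != predict(row):
            changed += 1
    return changed / len(rows)


# Made-up records for illustration only.
applicants = [
    {"income_k": 30, "group": "A", "region": "north"},
    {"income_k": 45, "group": "A", "region": "south"},
    {"income_k": 45, "group": "B", "region": "north"},
    {"income_k": 60, "group": "B", "region": "south"},
    {"income_k": 42, "group": "A", "region": "north"},
    {"income_k": 55, "group": "B", "region": "south"},
]

print("group: ", flip_rate(biased_model, applicants, "group", ("A", "B")))
print("region:", flip_rate(biased_model, applicants, "region", ("north", "south")))
```

Here half of the decisions depend on group membership alone, while the region has no effect at all.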

Q: How do I choose the right XAI technique for my application?

A: The choice of XAI technique depends on several factors, including the type of model, the complexity of the data, and the specific goals of the explanation. Some techniques, like LIME and SHAP, are model-agnostic and can be applied to a wide range of models. Others, like attention mechanisms, are specific to certain types of models (e.g., deep learning models). It’s often necessary to experiment with different techniques to find the one that provides the most useful and informative explanations.
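To show what "model-agnostic" means in practice, the sketch below computes exact Shapley values, the quantity that SHAP is built on, using nothing but calls to a prediction function. It is applied to two hypothetical models of different kinds. Replacing "absent" features with a fixed baseline value is an assumption made here for simplicity, and the brute-force loop over every feature ordering is only feasible for a handful of features. This is not the API of the SHAP library, which relies on approximations and model-specific shortcuts.

```python
from itertools import permutations


def shapley_values(predict, instance, baseline):
    """Exact Shapley values, averaging each feature's marginal effect over all orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]      # switch feature i from baseline to its real value
            updated = predict(current)
            totals[i] += updated - previous
            previous = updated
    return [total / len(orderings) for total in totals]


def interaction_model(x):
    """Hypothetical numeric model with an interaction between features 0 and 2."""
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[2]


def rule_model(x):
    """Hypothetical rule-based model: fires only when two conditions both hold."""
    return 1.0 if x[0] > 0.5 and x[1] > 1.0 else 0.0


instance = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]

interaction_values = shapley_values(interaction_model, instance, baseline)
rule_values = shapley_values(rule_model, instance, baseline)

print("interaction model:", interaction_values)
print("rule model:       ", rule_values)
```

In both cases the values add up to the difference between the prediction for the instance and the prediction for the baseline. The interaction term is split evenly between the two features involved, and the rule model divides its credit between the two conditions it checks.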

Q: Is XAI a mature field?

A: While significant progress has been made, XAI is still a relatively young and rapidly evolving field. There are many open research questions and challenges to be addressed.

Q: Does XAI guarantee fairness in AI systems?

A: No, XAI doesn’t guarantee fairness. It helps identify potential biases and understand the factors that influence predictions, but it’s ultimately up to developers and stakeholders to take action to mitigate biases and ensure fairness. XAI is a tool to help achieve fairness, not a replacement for ethical considerations and responsible AI development practices.

Q: Where can I learn more about XAI?

A: There are many resources available online, including research papers, tutorials, and open-source XAI libraries. Universities and research institutions also offer courses and workshops on XAI. Searching for "Explainable AI" or "XAI" on Google Scholar or arXiv will provide access to relevant research.

Q: Will XAI eventually eliminate the need for human oversight in AI systems?

A: No, XAI is not intended to eliminate human oversight. Instead, it’s meant to empower humans to better understand and interact with AI systems, enabling them to make more informed decisions and ensure that AI is used responsibly. Human oversight will remain crucial, especially in high-stakes applications where ethical considerations and accountability are paramount.